Wooten's Third Law and the AI Among Us

Something is Rotten in the State of The Art...

Artificial Intelligence (AI) Language Models (LLMs) have been making significant strides in various fields, including professional work. While most people have little contact with professional AIs, anyone who begins working with one is likely to find that, after a certain period of training, the AI will begin to hallucinate. Hallucinations are where the AI generates content that is factually incorrect, misleading, or simply made up. This phenomenon underscores the importance of having a Second Set of Eyes (SSoE) review in AI-generated work.

AI hallucination can manifest in various ways. For instance, in a knowledge cut-and-paste scenario, the AI might misinterpret or misrepresent the information it is summarizing. It might take a sentence out of context, mix up facts from different sources, or even invent facts that do not exist. This can lead to the creation of false or misleading information, which can have serious consequences, especially in fields like healthcare, finance, or engineering.

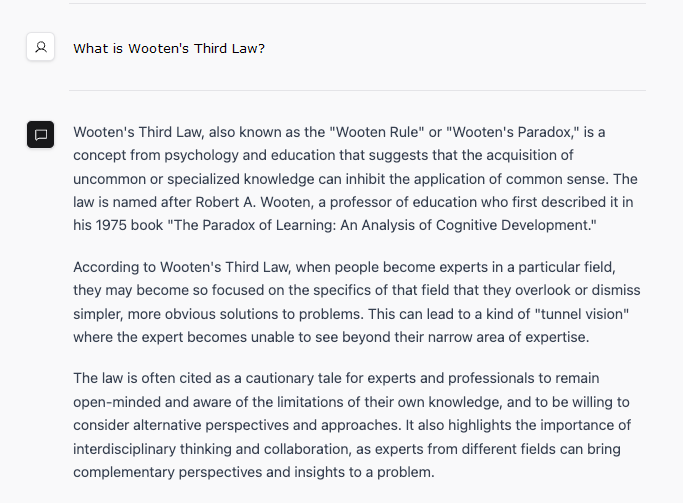

In the realm of structural engineering, for example, an AI might generate a blog post claiming that 'Wooten's Third Law' is a recognized principle in the field. However, no such law exists in structural engineering. This kind of error can lead to misunderstandings and misconceptions about the field, potentially putting people's safety at risk.

Another example could be in the calculation of stress or strain. An AI might state that 'stress is equal to moment over load' when in reality, stress is force over area. This kind of error could lead to serious mistakes in structural design and analysis. This could easily lead to errors and deaths.

Moreover, AI might also generate content based on its training data, which might not always be up-to-date or accurate. For instance, in a blog post about building science, an AI might discuss outdated practices or materials that are no longer in use, leading to confusion and misinformation.

While AI has the potential to revolutionize many industries, it is not infallible. AI hallucination is a real issue that can lead to the creation of false or misleading information. Therefore, it is crucial to have a Second Set of Eyes review in AI-generated work. This can help to ensure the accuracy and reliability of the information, protecting the public from potential harm and maintaining the integrity of the field.

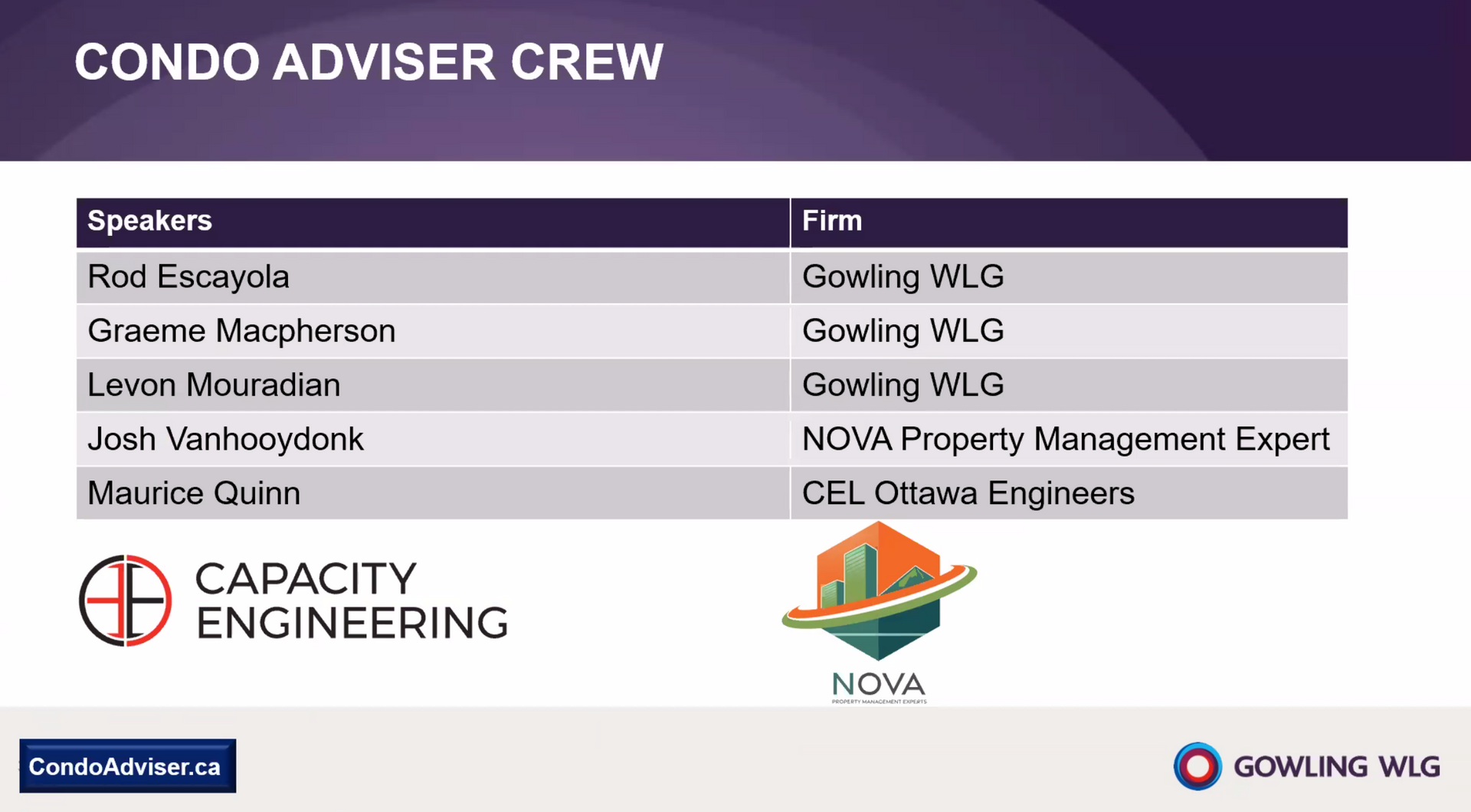

Until this sentence, this entire blog post has been written by CEL AI, an in-house LLM trained to be a Structural Engineering Assistant. This post was inspired by the prompt (i.e., a question asked of an AI) which is shown, along with CEL AI's reply, in the accompanying photo.

If you don't know anything about Wooten's Third Law (WTL), the answer seems to be excellent. Except that the origin of WTL is Jim Wooten, a steel engineer from the US, and its source is an article in Modern Steel Construction in 1971. To the best of my knowledge there is no Robert A. Wooten, nor was there ever a book on the Paradox of Learning written by said Ghost in the Machine. The first paragraph is written to sell you on the knowledge that CEL AI feels it should, or must, have in order to be useful. The tool made the name up, apparently blending in the name of Robert A. Heinlein, likely from a quote by the famous author that featured somewhere in the training materials. It was false, and so would be any work based upon an AI's product in the absence of a knowledgeable SSoE.

To quote myself, upon the release of CEL AI to our team: "Please note that CEL AI is a tool and should not be trusted to do anything at all that isn't subject to a Second Set of Eyes. [...] You may only ask the Juan Salinas|Ted Sherwood (Salinas|Sherwood) questions: Those to which you already know the answer." FYI: Prof. Salinas and Prof. Sherwood were/are Professors of Structural Engineering at Carleton University, the alma mater of the majority of our staff. Both held a very similar view of engineering work: it is critically important that an engineer know the ballpark in which the correct answer will be found in order to get the question right.
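As a rough illustration of the Salinas|Sherwood approach, here is a minimal Python sketch of a ballpark check: the engineer works out their own estimate first, then compares the tool's answer against it. The function names, the 15% tolerance, and the load case are all hypothetical, chosen only for illustration, and a passing check is no substitute for a Second Set of Eyes.

```python
def axial_stress_mpa(force_kn: float, area_mm2: float) -> float:
    """Axial stress = force / area. Takes kN and mm^2, returns MPa (1 N/mm^2 = 1 MPa)."""
    if area_mm2 <= 0:
        raise ValueError("area must be positive")
    return force_kn * 1000.0 / area_mm2


def within_ballpark(tool_answer: float, own_estimate: float, tolerance: float = 0.15) -> bool:
    """True when the tool's answer is within `tolerance` (a fraction) of the engineer's own estimate.

    The 15% default is an arbitrary assumption, not a code-mandated value.
    """
    if own_estimate == 0:
        return tool_answer == 0
    return abs(tool_answer - own_estimate) <= tolerance * abs(own_estimate)


# Hypothetical load case: 500 kN axial load on a 5000 mm^2 section.
own_estimate = axial_stress_mpa(500.0, 5000.0)

# Hypothetical value returned by an AI tool, in MPa.
tool_answer = 250.0

# Flag the answer if it falls outside the engineer's ballpark.
needs_review = not within_ballpark(tool_answer, own_estimate)
```

In this made-up case the engineer's own estimate and the tool's answer disagree badly, so the answer is flagged. The check only works because the engineer already knew roughly where the correct answer should land.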

So, after all of that, the facts are simple and the risks enormous... Plainly stated:

To get the right answer from an AI, you may only ever ask a question to which you already know the answer. If you implement the wrong answer due to trust in what is yet another computer black box, people could (and given the state of the advancing field, people probably will) DIE.

AI is a tool, and a tool must have an operator. As it currently stands, the State of the Art in AI has no place in the State of the Profession in the absence of appropriate review and professional responsibility borne by a suitably trained and licensed Professional Engineer.